If a musical piece has only chords written and no melody, does adding a random melody ‘on the fly’ during a performance constitute improvisation? Maybe. I thought so before this forum, but now I realise it could be considered a composition since a framework, i.e. the chords, was already in place. I still like to think the idea of randomness is separate from improvisation. Certainly related, but separate nonetheless. This is all an interesting paradox, but one my brain can do without at the moment. My idea of improvisation is being given a task to do and making it happen with whatever limited means are available at that given time. That could mean playing a musical piece without any sheet music, recording a performance with limited equipment or breaking out of prison with no more than a paper clip and a drinking straw.

Thursday 31 May 2007

Week 12 - CC1

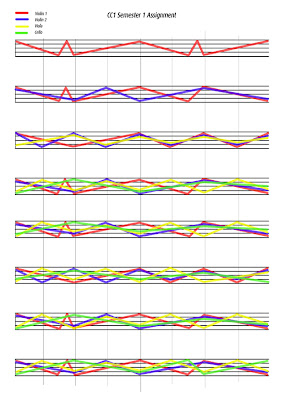

Here's the score for my major assignment. It's pretty simple. The different colours represent different "instruments" while the durations are represented by the length of the lines.

Through our tutorials with Mark Carrol I was slightly confused about the definition of musique concrète. As far as I can tell, musique concrète is basically music not made from traditional instruments. Natural ‘worldly’ sounds are used to create music instead, and although there is usually a lot of tape editing and looping involved, these sounds are kept natural. That is my dilemma. I am going to use some noises I recorded, but I won’t be keeping them entirely natural. I’ll be pitch shifting and stretching the sounds to create a bizarre string quartet.

I will be doing this piece entirely in Pro Tools using the Speed plugin although the original sounds were recorded on a Marantz CP230 with a Rode NT3. The score uses zig zag lines to illustrate the durations of the notes and to represent the movement and duration of the sounds. This zig zag score could be used for a number of different sound sources. One that comes to mind is hack sawing four metal tubes of different lengths and widths. Hmm, I think I'll do just that over the holidays...

-

Haines, Christian. 2007. “Creative Computing.” Seminar presented at the University of Adelaide, 31st May.

Wednesday 30 May 2007

Week 12 - AA1 - Mixing (2)

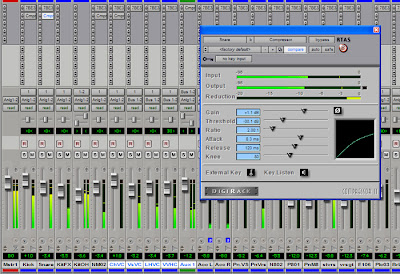

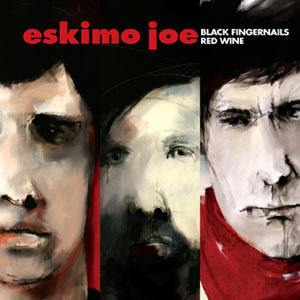

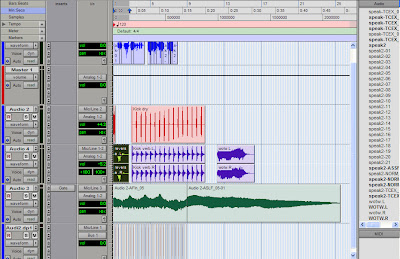

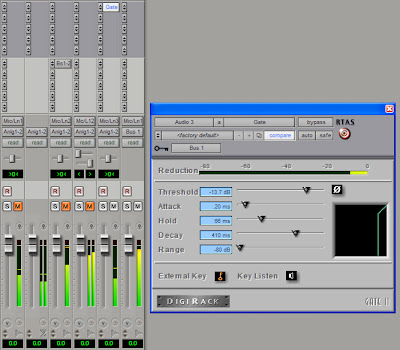

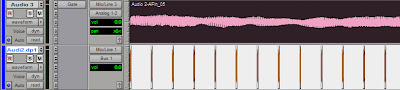

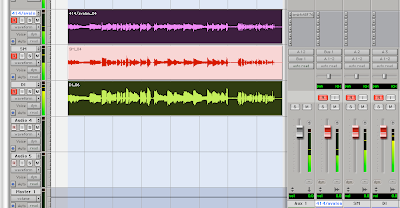

First up I opened the session from last week. I ended up moving all the vocal tracks over to where I could work on them more easily, since I was only using a small section of the song (highlighted in picture). I applied compression to the kick, snare, bass and vocals. I didn’t think it was necessary to compress everything. I found these parts really ‘come alive’ after compressing. The three chorus vocals (the one that doesn’t say “stayed in bed”) were sent through a bus and compressed together on the one aux as demonstrated in class. This helped keep the CPU strain down.


For the second mix I added some reverb. The reverb is quite subtle, but I decided to go for that as I feel it’s fairly easy to exaggerate reverbs, and I wanted to try to use it to blend and glue tracks together as I find that challenging. The only time it pops out as a major effect is with the delay on the phrase “I know.” Again, an aux track was created with the reverb plugin on it for the vocals and snare to be bussed to. The bus faders were used to set the levels.

A quick “mastering” was done for the last mix. I put Maxim on the master fader, set its output to –0.1 and lowered the threshold just enough to raise the levels without distorting. Well, it might be distorting, as I don’t have the gear a real mastering engineer would have.

Doing the mix in a step by step process definitely made these mixes much better than previous mixes I’ve done and helped a great deal in finding places for each sound in a busy mix. It’s just a matter of discipline on my part now to keep doing it this way and not go back to fiddling about with compressors before panning, etc.

-

Mastered version.

Fieldhouse, Steve. 2007. “Audio Arts 1 Seminar – Drums.” Seminar presented at the University of Adelaide, 29th May.

-

Digidesign. 1996-2007 Avid Technology, Inc All Rights Reserved.


-

Eskimo Joe. 2006. “New York” Black Fingernails, Red Wine (album). Mushroom Records.

Wednesday 23 May 2007

Week 11 - Forum - Construction and Deconstruction (2)

After reading a few other student blogs I have a fairly good idea of the presentations. Since I don’t like or 'care' for DJ music, I’ll just do an Edward Kelly and completely ignore Simon's presentation as it will just take up valuable real estate in this blog.

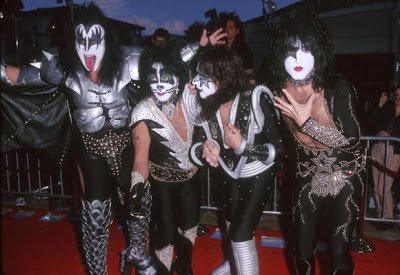

Hmm, Luke Digance comments: “It was hypothesised that this is related to the writers of the music within the Black Metal genre having a hate for practically every element of modern society.”[1] Now I’m assuming Nathan did in fact allude to this as I make my next statement. This makes me chuckle. Not Luke’s comment, but the statement of “hate for practically every element of modern society.” Now I’m looking at a picture of this band, whom I’ve never heard of before, and I see them wearing painted faces and black leather costumes adorned with studs and spikes. Who do they look like?! Which band has had the biggest influence on how a modern rock concert in “modern society” is presented?! You wanted the rest, you got the rest. The band that wants to look like the hottest band in the world…WHOEVER!!!!!!!! How can a band that hates modern society take so much from it for their image and try to make it their gimmick? I assume they do not use electric guitars as that is a modern instrument. I would assume I am incorrect.

I’m really disappointed now that I missed John Delaney’s presentation. Again, I’ll quote Luke: “John came to the conclusion that without the visual stimulation, the music composed has no psychoacoustic result of fear within the listener.” This is exactly what I said last week, so it looks like John and I have come to the same conclusions. It would be very interesting to see what would come out of a conversation between us on the issue of fear in music and whether it could be made possible to create it at will. Judging by the blogs, it appears his presentation went a little better than mine. No doubt he’s a better public speaker than I am, or perhaps it was because he steered clear of words like “formula.”

Whittington, Stephen. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 24th May.

Whitelock, Simon. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 24th May.

Shea, Nathan. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 24th May.

Delaney, John. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 24th May.

[1] Digance, Luke. 2007. “Forum wk11.” http://www.eclectici.blogspot.com/. (Accessed 26th May 2007)

Week 11 - CC1 - Meta (2)

This time I got around the full bookings of Studio 5 by patching two computers together in the computer lab. I had originally booked the DAT recorder for Tuesday but couldn’t find Peter to actually get it on the day. I thought the patching would be pretty convenient, but unfortunately there was a horrible buzz introduced to the signal. All I did was patch the headphone jack of the computer running MetaSynth into the Mbox on the other computer, which was recording into Pro Tools. I did get some strange looks from other students when they saw what I was doing.

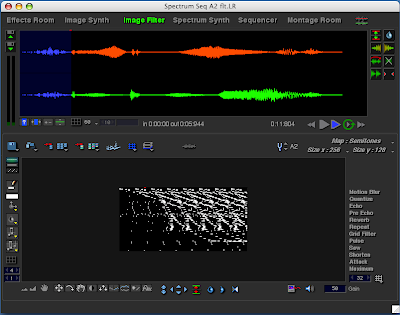

First up, I went straight back and did the Soundwave voice from last week. Here it is.

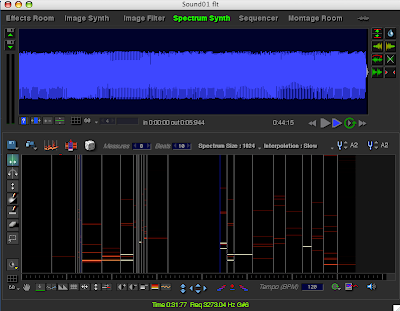

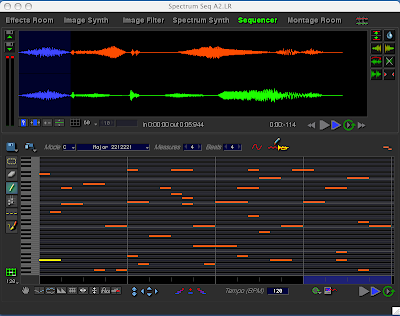

I then went and played around with the other rooms. Image Filter had some cool sounds. I drew some squiggles and played around with the effects on the right column. I rendered sections of my voice as I went. Spectrum Synth was ok. It just seemed to me that all that I had to do was move the columns around to make different lengths of ‘ambient sections.’ The Sequencer was probably the best as far as actually being able to tell what the processed sound would be, apart from the Effects Room. This created a kind of video game ‘Mario Bros’ style tune with my voice. Montage Room I couldn't figure out in the time I had.


It’s pretty amazing that all of these sounds were my voice to begin with. The only thing I’d complain about, and it’s no doubt only because I’m not used to the controls or effects yet, is that most effects seem to be pretty random and I had no real suggestion or idea what it would sound like when I chose an effect. Apart from the first two rooms, it just seems like a lot of trial and error to get the sound you want.

-

-

Haines, Christian. 2007. “Creative Computing.” Seminar presented at the University of Adelaide, 24th May.

Meta Synth. 2006 U&I Software LLC


Week 11 - AA1 - Mixing(1)

First up I pulled all the faders down. I then went ahead and made a mono mix, so I centred all the panning. I then went ahead and simply started making a balance of the tracks, starting with the drums and working my way right making sure the next track that became unmuted wasn’t too loud so as not to “chase the tail.”

I then made a mix with panning. I started by alt+clicking to zero all the faders so as to hear all the tracks at once. I then started panning (without adjusting levels at all), listening for where each sound appeared clearest. I then adjusted the levels to balance the tracks.

For the third version I used eq to clean up the sounds. I used HPF and LPF and a bit of parametric to boost a bit of some of the tracks here and there. There was no eq boost at all on the vocals but there seems to be a lot of sibilance. Since a de-esser is technically compression, I left the sibilance alone. I assume we’ll need to address this in the next mix though.

-

This exercise was good, but at the same time I feel it was a waste of time simply for the fact that everyone (apart from those living under rocks) has heard this song before and already has “the way this song should sound” already stuck in their head. It was very difficult to get away from that. Speaking of which, I wonder if anyone actually used this song as their reference?!? I know songs get remixed all the time, but we weren’t asked to do that. We were simply asked to balance the tracks. It would have been better if we say, had to mix a song a third year had recorded. As long as it wasn’t anyone famous it would be pretty much a given that we wouldn’t have heard the song before. Hmmm, are we going to be mixing the NIN track as well?

- (mono)

- (with panning)

- (with panning and eq)

-

Fieldhouse, Steve. 2007. “Audio Arts 1 Seminar – Drums.” Seminar presented at the University of Adelaide, 15th May.

Digidesign. 1996-2007 Avid Technology, Inc All Rights Reserved.

Eskimo Joe. 2006. “New York” Black Fingernails, Red Wine (album). Mushroom Records.

Friday 18 May 2007

Week 10 - Forum - Construction and Deconstruction (1)

After all the ramblings of hit formula and psychoacoustics, one thing is definite. I’m no public speaker.

Everything resonates. It’s the simple fact of the universe, but can we willfully affect that resonance to basically “do our bidding?” Can every number 1 song be deconstructed and stripped of all its dissimilar aspects to leave only the exact same elements remaining for every single song? I would recommend reading the chapter in “Mixing With Your Mind” that I referred to today (well, the whole book really; parts of it are in our readings), as Stav says it better than I did. What I hoped to achieve was to take it one step further and see if his concept could be applied to discover the ‘secret ingredients’ that go into other genres of music for film scoring and soundtracks. Namely the horror genre.

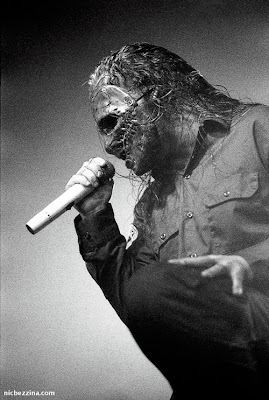

Some people may have misinterpreted my intention for the Slipknot video (which is my fault for the poor presentation). The purpose of the video was to demonstrate fear in music, whether it could be manufactured/constructed at will, and the bodily reactions one has without even realising it. It was not about whether someone would buy an album based on seeing what the artists look like, or a debate on whether Slipknot is scary. Slipknot was the best reference I had since I had the video and the album and could do an easy A/B comparison with and without visual stimulus. Even though a horror film tends to build tension and suspense in the music and doesn’t usually just jump out at you at the start like a metal concert, it served the purpose of the experiment by showing the various reactions such as squirming, staring in disbelief, rapid eye blinking and laughter. In hindsight it may have been better to use a film such as the remake of The Texas Chainsaw Massacre, presented once with the footage, then with the lens covered so as just to listen to the soundtrack (a dark musical section, not one filled with screaming and a howling chainsaw). Unfortunately I don’t have that video.

It is a known fact that famous record producers use formulas. It is also a known fact that marketing gurus use formulas in advertising to sell us their products. Formulas are used everywhere where a buck is to be made. Ignore it if you will, but we are either the manipulators or the manipulated. Anyone that doesn't believe this is a naive fool.

The other two presenters also basically spoke about “deconstructing” a piece of work. Dragos spoke of the addition and subtraction of loops in songs while Matthew talked us through the working processes of a piece he worked on. I have a funny feeling we will be in for more of this sort of theme in next week’s forum. I hope I’m wrong and there are more lateral thinkers out there.

Whittington, Stephen. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 17th May.

May, Frederick. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 17th May.

Nastasie, Dragos. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 17th May.


Mazzone, Matthew. 2007. Forum Workshop “Construction and Deconstruction in Music(1)”. Forum presented at the University of Adelaide, 17th May.

Week 10 - CC1 - Meta (1)

Meta Synth seemed pretty straightforward. After seeing the demonstration in class I just had to make my voice imitate Soundwave from the 1980s cartoon The Transformers. I experimented with the pitch shifter and the harmonizer to do it. It sounded pretty cool, but then I remembered we couldn’t save the file with the version in the computer lab and all the studio time is used up. Grrrrr. “Rumble, eject. Operation destruction.”


Haines, Christian. 2007. “Creative Computing.” Seminar presented at the University of Adelaide, 17th May.

Meta Synth. 2006 U&I Software LLC

Picture taken from the DVD boxset "Transformers – volume 1" Madman Entertainment, 2003.

Week 10 - AA1 - Drums

Drums are the instruments I enjoy recording the most. This will be the sixth time I've recorded a drumkit and it's still exciting. This drum recording is taken from my main AA1 recording project that was done on Saturday. It’s a standard drum kit: kick, snare, r1, r2, floor, hihat, ride and a cymbal. The session plan for the project was used, but I did add the KM84s for ride and hihat. After Bradley helped set the mics up in their approximate locations, I set about getting fussier as I started to pull a sound.

First up I wanted to set the overheads. As I listened in the control room with headphones, Bradley moved the U87 up and down and across the floor tom for me as I listened for optimum resonance. The second overhead was placed by my listening again in headphones to get the snare in the middle of the stereo field. I chose the U87s because they seem rounder and less bright/splashy on the cymbals than the smaller diaphragm condensers I've used in the past.

I had the NTV on the kick originally, but then changed to the U89i simply because I thought it sounded better. I didn’t want a “clicky” sound at all and was going for more of a “thump.” I did have the e602 on the inside of the kick though, but that was to get a cleaner, tighter sound to blend with the outside mic.

The snare had the MZ-204. This was ok, but I perhaps should have changed the mic as there is a slight ring and it sounds a little boxy and thin.

The MD421s are fantastic. This is the first time I’ve had the opportunity to use them on toms. Out of necessity, I used the Beta 52 on the floor tom. It just fit between the ride and rack 2.

I used AKG 414s set to omni for room mics. They were down low to the ground about ten feet away, pointing at the kick. I set myself a challenge of not using eq during recording or mixing, so this is the raw sound as is.

Drum recording 1: This is the full kit, all mics.

Drum recording 2: This is a minimal sound with more ambience. Only the snare, inside kick mic, the overheads and the room mics were used.


-

Fieldhouse, Steve. 2007. “Audio Arts 1 Seminar – Drums.” Seminar presented at the University of Adelaide, 15th May.


Saturday 12 May 2007

Week 9 – Forum – Tristan Louth-Robins - Singing Teapot

I must admit, I was expecting the teapot to sing much as a crystal glass sings when you run your finger around the rim. When I thought of resonating teapots, I had visions of the teapot being vibrated by an outside frequency through sympathetic resonance (perhaps even by a sub or ultra frequency) and therefore giving off its own audible frequency. I was slightly disappointed because I was excited by my expectations, but it was interesting nonetheless.

I’d like to offer my opinion on the subject in case a few simpler ideas were overlooked. In a large room such as the one Alvin Lucier experimented in it wouldn’t have been as big an issue, but forcing the sound back into the teapot may be creating a pressure mode (most likely, judging by the appearance of new overtones seen in later screengrabs of Peak). A 200Hz wave is around 172cm long, which the teapot clearly isn’t, but playing these frequencies into an area that can't reproduce them properly will cause a buildup of frequencies below that point. (I know the argument will be that the teapot generated this frequency itself, but that frequency was originally recorded outside of the teapot in a room capable of reproducing 200Hz.) They will then start to generate their own harmonics, interfering with any sort of 'flat response' there might have been. There could also have been a standing wave present. A sphere is of course the perfect place for standing waves to thrive. Even though the teapot is not a perfect sphere, the widest part horizontally is perfectly round as far as I could tell, apart from the area with the spout. Every opposite is exactly the same length on this axis and any resonant frequency would be reinforcing itself. 344/400 (the speed of sound divided by double the frequency of the suspected standing wave, i.e. half its wavelength) is 0.86m, or 86cm. It just so happens that 5 instances of 17.2 = 86. This alone, as far as I'm aware, won't create a standing wave as the waveforms have to move past each other, and all this may be stretching things, but it would be interesting to see if the inside of the teapot’s measurement was in fact 17.2cm. This would hide the actual resonance of the teapot structure and focus our ears on the resonance of the first standing wave of the teapot.


experimented in it wouldn’t have been as big an issue, but forcing the sound back into the teapot (and probably most likely by the appearance of new overtones seen in later screengrabs of Peak) may be creating a pressure mode. A 200Hz wave is around 172cm which the teapot clearly isn’t but playing these frequencies into an area that can't reproduce them properly (and I know the argument will be that the teapot generated this frequency itself, but that frequency was originally recorded outside of the teapot in a room capable of reproducing 200Hz)will cause a buildup of frequencies below that point. They will then start to generate their own harmonics interfering with any sort of 'flat response' there might have been. There could also have been a standing wave present. A sphere is of course the perfect place for standing waves to thrive. Even though the teapot is not a perfect sphere, the widest part horizontally is perfectly round as far as I could tell apart from the area with the spout. Every opposite is exactly the same length on this axis and any resonant frequency would be reinforcing itself. 344/400 (double the length of the suspected standing wave) is 86cm. It just so happens that 5 instances of 17.2 = 86. This alone as far as I'm aware won't create a standing wave as the waveforms have to move past each other, and all this may be stretching things but it would be interesting to see if the inside of the teapot’s measurement was in fact 17.2cm. This would hide the actual resonance of the teapot structure and focus our ears on the resonance of the first standing wave of the teapot. Noting the volume and keeping future passes through the teapot at that particular volume level would create a constance that would eliminate amplifying the standing wave and avoiding a pressure mode altogether allowing the true resonance of the teapot to sing. Come to think of it that may work nicely. 
If the original recording from the teapot was at say 20dB (or whatever), then play it back into the teapot at –3dB from that level. That way the original resonance is added to perfectly. The progress could be checked at intervals by amplifying the results so they could be heard at a decent level, but at least it should be free of artifacts and be a true resonance of the teapot. There’s also the addition of presence peaks from the mic and the amplifiers THD figures that need considering. Perhaps experiment with different mics, speakers, preamps and amplifiers as the fact that the tone landed exactly on 200 Hz seems odd to me. It’s not 197.3 or 204.7 for example but smack bang on 200Hz. Eliminating rogue frequencies would give a more acurate result. All this is from a slightly scientific point of view and if the whole aim is to simply make an instrument out of it then I’d say it works nicely as is.
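For anyone who wants to check the sums above, here is a rough sketch in Python. It assumes the same 344 m/s speed of sound used in the post; the function names are my own, and the –3dB conversion uses the standard amplitude formula rather than anything measured on the teapot.

```python
SPEED_OF_SOUND = 344.0  # metres per second, the figure assumed in the post


def wavelength_cm(freq_hz, c=SPEED_OF_SOUND):
    """Wavelength in centimetres for a frequency in Hz (wavelength = c / f)."""
    return c / freq_hz * 100.0


def db_to_amplitude_ratio(db):
    """Convert a level change in dB to a linear amplitude ratio."""
    return 10 ** (db / 20.0)


w200 = wavelength_cm(200)            # about 172 cm
w400 = wavelength_cm(400)            # about 86 cm
fits = w400 / 17.2                   # about 5 lengths of 17.2 cm
drop = db_to_amplitude_ratio(-3.0)   # a bit over 0.7 of the original amplitude

print(round(w200, 1), round(w400, 1), round(fits, 2), round(drop, 3))
```

So a –3dB playback level means feeding the sound back in at roughly 70% of its original amplitude on each pass.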

Whittington, Stephen. 2007. Forum Workshop “Still and Moving Lines”. Forum presented at the University of Adelaide, 10th May.

Louth-Robins, Tristan. 2007. Forum Workshop “Still and Moving Lines”. Forum presented at the University of Adelaide, 10th May.

Week 9 – CC1 – Desktop Music Environment (3)

This time around I made a couple of loops that I could trigger in Reason instead of random noises. I thought I’d have a go at making a rhythmic ‘song.’ Well, a song of sorts. There’s a really long drone that was made from the word “thereafter”, or at least bits of it, since Tab to Transient cuts off a little at the start. This is a really cool sound. It’s almost like the sound of the aliens in War of the Worlds. It was made by simply time stretching the word to around 7 seconds. I then normalised it to 95% and went to add reverb. It’s a mono track and I felt it didn’t have enough space to it, so I doubled the track and applied some chorus to one copy. I put both on a stereo track and then applied the reverb via an AudioSuite plugin.

I did the gate trick, ‘triggering’ a sound by using the Key Input on the gate. The gate was placed across a long droning sound that was phasing, and a small transient from another sample was used as the trigger.
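The gate trick can be sketched in a few lines of Python. This is only a toy model of the idea, not how the Pro Tools gate is implemented: the `threshold` and `hold` parameters and the sample values are made up for illustration. The drone only gets through while the key signal has recently crossed the threshold.

```python
def keyed_gate(signal, key, threshold=0.5, hold=3):
    """Pass `signal` only while `key` has recently exceeded `threshold`.

    `hold` is how many samples the gate stays open after each trigger.
    """
    out = []
    remaining = 0  # samples left before the gate closes again
    for s, k in zip(signal, key):
        if abs(k) >= threshold:
            remaining = hold  # a transient on the key input re-opens the gate
        if remaining > 0:
            out.append(s)
            remaining -= 1
        else:
            out.append(0.0)  # gate closed: the drone is muted
    return out


# Hypothetical data: a constant "drone" and a key track with two short transients.
drone = [0.8] * 10
key = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.9, 0.0, 0.0]
gated = keyed_gate(drone, key)
print(gated)
```

The drone itself never changes; it is the transient on the key input that chops it into a rhythm.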

There were seven sounds in all when I finished bouncing the parts in Pro Tools. These were imported into Reason and placed in key zones that were within easy reach on the keyboard. The main rhythm sounds all had a C as their trigger key to keep them in tune (though each had a whole octave to itself), and I did try hitting other keys within that octave to try different combinations and syncopations. Some worked, some didn’t. Although it’s not entirely in time, I am happy with the progress I am making.
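Here is a small sketch of the key zone idea in Python, assuming the usual sampler behaviour where a key other than the root plays the sample faster or slower by a factor of 2^(semitones/12). The sample names, the MIDI note numbers and the helper functions are all hypothetical; this is not Reason's own data format.

```python
def build_key_zones(sample_names, lowest_root=36):
    """Give each sample its own octave, with a C (MIDI note divisible by 12) as root."""
    zones = []
    for i, name in enumerate(sample_names):
        root = lowest_root + 12 * i
        zones.append({"sample": name, "root": root, "low": root, "high": root + 11})
    return zones


def playback_ratio(zones, note):
    """Return (sample, speed ratio) for a MIDI note, or None if no zone covers it."""
    for zone in zones:
        if zone["low"] <= note <= zone["high"]:
            semitones = note - zone["root"]
            return zone["sample"], 2 ** (semitones / 12)
    return None


zones = build_key_zones(["rhythm_loop", "drone"])  # made-up sample names
print(playback_ratio(zones, 36))  # root key: sample plays at its original speed
print(playback_ratio(zones, 43))  # seven semitones up: faster and higher
```

Hitting the C plays the loop as recorded, while the other keys in the octave speed it up, which is why they give different pitches and syncopations.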

-

Finished Soundscape. (Databus won't let me upload for some reason. The file is on this page at the bottom labelled Reason2.)

Haines, Christian. 2007. “Creative Computing.” Seminar presented at the University of Adelaide, 10th May.

Digidesign. 1996-2007 Avid Technology, Inc All Rights Reserved.

Reason. 2007 Propellerhead Software, Inc All Rights Reserved.

Week 9 – AA1 – Bass Guitar Recording

All of these recordings have had compression and EQ applied.

Bass1

This was recorded with a 414 about a metre away. Although there's a bit of fret buzz, it has nice transients and is a solid sound. I'd use this sort of sound on 'new metal' or on a recording for a funk band, as I'd imagine it would sound quite good with a slap style of playing.

Bass2

I moved the mic a bit closer this time. It sounds cleaner in the higher frequencies while remaining solid in the lower frequencies. It seems a little rounder to my ears, and fuller. I do like this sound better than the first, but it could really only be judged by putting it in a mix.

Bass3

This was miked with the SM56 aimed at the centre of the speaker. It doesn't seem to have the same top-end edge as the first two and sounds a little thinner.

Bass4

This is the Behringer DI recording. It is the 'thinnest' sounding of the lot. I wouldn't use it alone in a mix; it would be blended with an amp sound.

Bass5

This is the SM56 and the DI signal combined. They were time aligned. The difference is noticeable: the two separate waveforms have different timbral qualities, but when added together they combine well and add a new depth and power to the sound. Kind of reminds me of the Faith No More bass sound.

Bass6

This is the 414 and DI signal combined. For this example I decided to automate the phase button on an EQ (which was left flat) to demonstrate the effect phase has on the power of a sound. Although the signals were then out of phase, the bottom end was deeper and more powerful. It definitely sounds better out of phase.
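Why a flipped signal can end up sounding bigger is easier to see with numbers. The Python sketch below is purely illustrative and not taken from the session: it assumes a 100Hz test tone, a 48kHz sample rate and a mic signal arriving half a cycle behind the DI, then compares the level of the two summed normally with the level when one is polarity inverted (which is what a phase button does).

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))


def sine(freq_hz, sample_rate, length, delay_samples=0):
    """A sine wave, optionally arriving `delay_samples` late."""
    return [math.sin(2 * math.pi * freq_hz * (i - delay_samples) / sample_rate)
            for i in range(length)]


def sum_levels(delay_samples, freq_hz=100, sample_rate=48000, length=4800):
    """Return (RMS of DI + mic, RMS of DI + polarity-flipped mic)."""
    di = sine(freq_hz, sample_rate, length)
    mic = sine(freq_hz, sample_rate, length, delay_samples)
    normal = [a + b for a, b in zip(di, mic)]
    flipped = [a - b for a, b in zip(di, mic)]  # subtracting = inverting one signal
    return rms(normal), rms(flipped)


# Hypothetical case: the mic is 240 samples (half a 100Hz cycle) behind the DI.
normal_level, flipped_level = sum_levels(240)
print(round(normal_level, 3), round(flipped_level, 3))
```

With that particular delay the normal sum almost cancels at 100Hz and the flipped sum reinforces it. With no delay at all it is the other way round, so which setting gives the deeper bottom end depends on how the two signals happen to line up.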

-

Fieldhouse, Steve. 2007. “Audio Arts 1 Seminar – Bass Guitar.” Seminar presented at the University of Adelaide, 8th May.

Digidesign. 1996-2007 Avid Technology, Inc All Rights Reserved.

Sunday 6 May 2007

Week 8 - Forum - Gender and Music Technology (2)

It was refreshing to see that this week everyone steered clear of the usual stereotypes and concentrated on the music. The dehumanised sound of the band Kraftwerk was, in Bradley’s words, “de-gendered.” I tend to agree, as it had odd, quirky lyrics that were sung with odd effects, taking the male/female sound out of it. Speaking of perspectives from a gender point of view, the issue of cyborgism was raised. I find this topic fascinating as there are so many programs coming out that can make music with very little input from the operator. Just scan a photo or type in some letters and the program does the rest. Talk about completely removing gender! Yes, these ‘songs’ are usually of a low standard compared to the work of a human composer, but nevertheless it’s a step forward. Computers such as ENIAC could barely add two and two together a few decades ago. Which brings me to the Matrix. Who would have thought I would get such visual images of ‘penetration’ popping into my head at a forum? I’ve never thought of the Matrix like that, and whether I agree or disagree, it did force me to think further outside the square, which is always a good thing. I wonder how Trinity fits into it all. Maybe I'll leave that one alone.

For intermission we were treated to a viewing of the Bohemian Rhapsody Show and then it was back to the forum.

There is sometimes cultural stereotyping that should be considered as Edward pointed out. I remember when I was at high school and the teachers would be sorting out the work experience week. Students would be stuck in jobs that the teachers thought they would be best suited for. Shouldn’t the student choose? This was a long time ago so I hope they sort it out differently these days. Although, it is probably done on a computer which no doubt brings some scary results.

Whether we see a penis when we look at a microphone advertisement or not is always going to be subject to opinion. The same applies to whether a woman (or a man for that matter) can do a particular job or is even accepted by their peers. Unfortunately, opinion of a person's ability is usually the deciding factor, not their actual ability.

Whittington, Stephen. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Harris, David. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Leffler, Bradley. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Gadd, Laura. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Cakebread, Ben. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Kelly, Edward. 2007. Forum Workshop “Can You Tell the Difference? - Gender in Music technology”. Forum presented at the University of Adelaide, 3rd May.

Week 8 - AA1 - Electric Guitar

Guitar1: Sennheiser 421 off axis, pointing towards the edge of the cone's dust cover, 5cm away from the edge and 5cm away from the front.

Guitar2: Neumann U87 off axis, pointing towards the edge of the dust cover, 5cm away from the edge and 6cm away from the front. I tried a few other positions (on axis, further away, etc.) but it often sounded boxy.

This is the two guitars mixed together. It's pretty ordinary. I haven't picked up a guitar in about a year and it shows.

Guitar3: This is the same two mics (yes, the same ones) set up in XY, about 10cm away, directly in front and dead centre.

I was going to add the next part of the Metallica song but got utterly frustrated with the sound. Perhaps I should have recorded the crappy solid state noise to make up the numbers for this assignment, but to be honest all I got was a headache from going back and forth to the dead room, moving the mic and fiddling with amp settings. Even when I did record the sound, it was completely different from what was coming out of the amp. I recorded with no EQ or compression and the amp had its treble and mids rolled completely out, but what was coming through the monitors was a brittle, bright, edgy and distorted 'cheap' sound. I had plans of recording a man's amp over the weekend, but unfortunately I didn't get a quiet moment alone at home to set mine up.

-

Fieldhouse, Steve. 2007. “Audio Arts 1 Seminar – Electric Guitar.” Seminar presented at the University of Adelaide, 1 May.

Week 8 - CC1 - Desktop Music Environment (2)

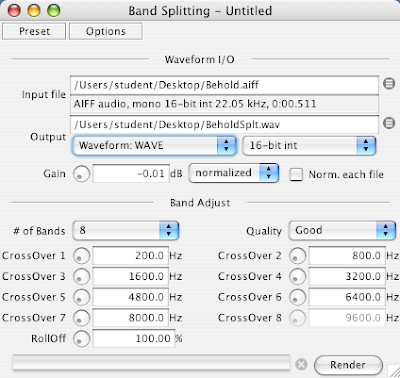

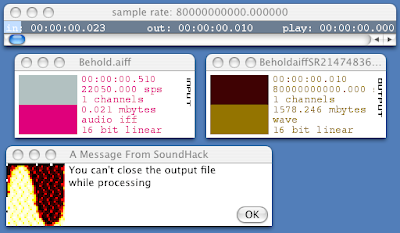

Well, after last week's debacle working with Reason, I figured I’d better sort this ‘key zone’ issue out properly. Yay! It seems I have sorted it out, but before we get into that there’s some sound manipulation in order in FScape and SoundHack. I used the band splitting plugin to split the word “Behold” into 8 parts and processed the full sentence in SoundHack with the phase vocoder plugin, then split that into 4 parts with the band splitter. I went back to play around with it some more today, and chose some extreme settings. I think they were a little too extreme as the file size went ballistic. I found it strange that there is no cancel option to stop a process, but since it is an early audio processor it is easy to forgive. I let it get to over a gig before I shut the whole program down to stop it.

As I mentioned earlier, I have figured out the key zones and placed my sounds together where they would be convenient to play and give the pitches I was after. I’m still no keyboard player but I am much happier with the results this week and am getting more confident with Reason. After today's Perspectives lesson, I think it would be a good task for me to figure out how to assign different sounds to velocity changes.

Finished Soundscape.

Haines, Christian. 2007. “Creative Computing.” Seminar presented at the University of Adelaide, 2nd May.

Reason. 2007 Propellerhead Software, Inc All Rights Reserved.
